Worldwide thirst for data crunching is going through the roof. According to Cisco, it will roughly triple from 2015 to 2020. Gaming, Facebooking and Netflix binges are increasing consumer computing, but about 70 percent is still corporate computing. Annual global data center IP traffic will shoot up from 4.7 zettabytes to 15.3 zettabytes. What is a zettabyte? I’m afraid to ask.

In the U.S., data centers are using massive amounts of electricity, more than 70 billion kWh per year. But despite the huge increase in digital processing, growth in electricity consumption has begun to flatten, from 90 percent in the first five years of the century to 25 percent from 2005 to 2010 and then just 4 percent by 2015. That’s because data centers have been tackling their energy use and making progress. They may be poised to make further advances. Up to half of the energy used by a data center has been for cooling, so cooling has been receiving plenty of attention.
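For a sense of how sharply that growth has slowed, the five-year figures can be converted into average yearly rates. This is a back-of-the-envelope sketch, not part of the original reporting: it assumes each percentage is total growth over a five-year window (including 2010-2015 for the last one), and the function name is made up for illustration.

```python
def annualized_rate(total_growth: float, years: int = 5) -> float:
    """Convert total growth over a period into the equivalent compound yearly rate."""
    return (1.0 + total_growth) ** (1.0 / years) - 1.0


# Five-year growth in U.S. data center electricity use, as quoted in the article.
# Treating the last figure as a 2010-2015 window is an assumption.
periods = {"2000-2005": 0.90, "2005-2010": 0.25, "2010-2015": 0.04}

for label, growth in periods.items():
    yearly = annualized_rate(growth)
    print(f"{label}: {growth:.0%} total, about {yearly:.1%} per year")
```

Under those assumptions, yearly growth drops from double digits in the early 2000s to under 1 percent in the most recent window.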

New cooling ideas

By the time the U.S. government started pointing out that about 2 to 4 percent of the country’s power was going to data centers, the biggest users (Google, Apple, Facebook, Microsoft and eBay) were already working on their energy footprints.

At first, they sought cleaner energy, adding solar panels to data center roofs and buying electricity from wind farms. Later, they moved some data processing to northern places like Scandinavia and Canada, put the servers outside in cage-like structures and took advantage of what they called ‘free-cooling,’ sometimes using air handlers. This could only solve some of the energy challenges for the world’s biggest users, and it didn’t really apply to thousands of smaller corporate data centers around the world, which often have strong arguments for locating their processing facilities near head offices and key population centers.

The experimentation has continued. Regular data center managers have been addressing industry oversizing habits developed in response to concerns about hot spots and redundancy. They have been pursuing hot aisle and cold aisle containment, air-flow accessories, server racks, evaporative cooling and data center infrastructure management (DCIM) software.

Google tried sea-water cooling in Finland to reduce reliance on local power plants, Apple added LED lighting and geothermal, and Microsoft actually enclosed a bunch of servers in a watertight tank and dropped it to the ocean floor. All of these efforts helped, but there is a real game changer on the horizon.

It’s the classic story of the inventor: some people came up with a new idea and were laughed off the planet. Then about a decade later the same innovators are poised to make it big.

That’s what is happening with data center cooling. Innovative solutions were presented at IT conferences shortly after the Y2K crisis evaporated, and were not taken very seriously. Granted, what they were proposing was pretty counter-intuitive. The fastest way to cool down a hot computer is to throw it into a bathtub, but most people would point out that conventional wisdom says water and electronics don’t mix. On the other hand, as many engineers know, liquid is far better at removing heat than air.

In addition to the consumer-driven and corporate digital booms already mentioned, we are now quickly advancing into the high-performance computing era, characterized by the huge demands of exascale supercomputers for scientific research, cloud computing, big data and artificial intelligence. Thus, demand looks set to triple quickly.

Liquid cooled mainframes

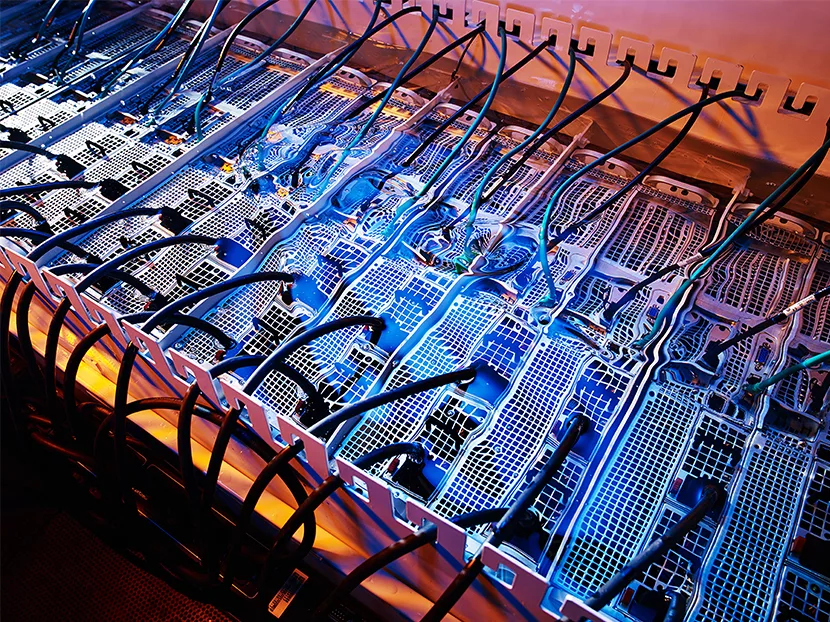

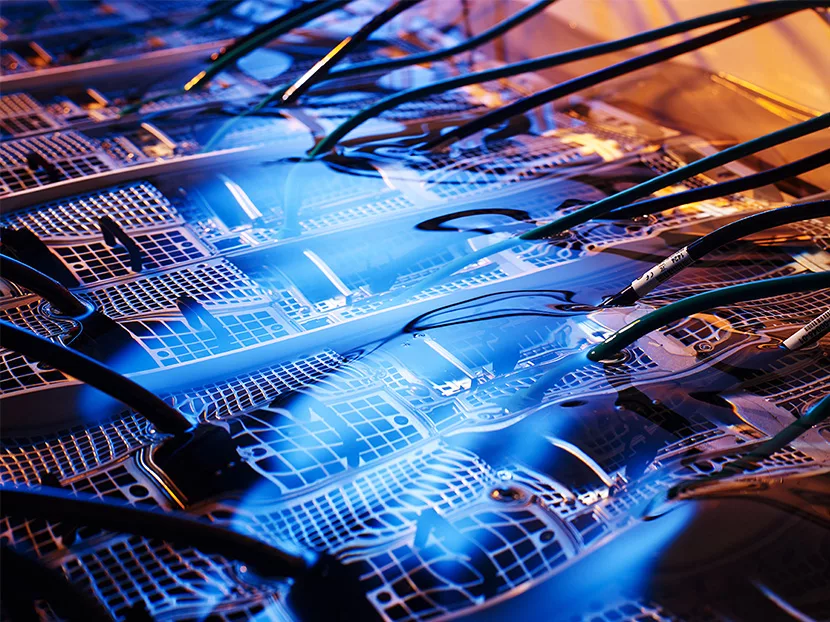

“Our liquid has about 1,200 times the heat absorbing capacity of air cooling,” says Brandon Moore of Green Revolution Cooling in Austin. He talks about a research university in Texas where his company has installed a completely different kind of data center. Rather than putting servers in tanks and dropping them into water like Microsoft, GRC puts the liquid inside the tanks, completely submersing the electronic components.

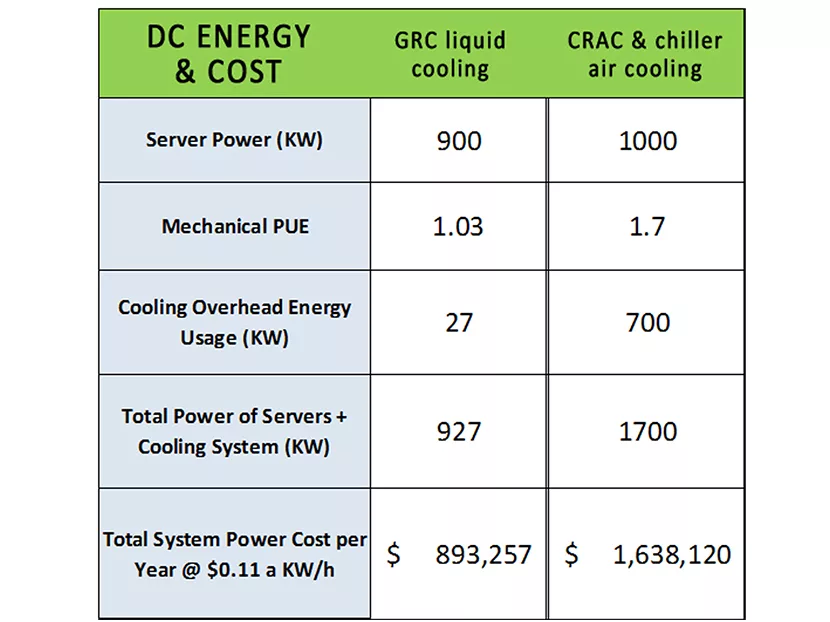

“It’s dielectric, a nonconductive synthetic mineral oil blend called ElectroSafe … There are no server fans, and it exchanges to a water loop,” he explains, meaning the heat energy moves from the oil through a heat exchanger to a water loop that can be as warm as 110 F, making modest demands on an evaporative cooling tower. “It’s a lot less expensive. There is a 90 percent reduction in energy use. The PUE (power usage effectiveness) is 1.03 or 1.05, compared to 1.7 for air cooled.”
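PUE is total facility energy divided by the energy that reaches the IT equipment, so anything above 1.0 is overhead, mostly cooling. The sketch below uses that standard definition to check how the quoted PUE numbers line up with a roughly 90 percent cut in overhead. The function names are hypothetical, and it assumes the IT load is the same before and after.

```python
def overhead_fraction(pue: float) -> float:
    """Non-IT energy (cooling, power distribution, lighting) per unit of IT energy."""
    return pue - 1.0


def overhead_reduction(pue_before: float, pue_after: float) -> float:
    """Fractional cut in non-IT energy between two PUE values at the same IT load."""
    return 1.0 - overhead_fraction(pue_after) / overhead_fraction(pue_before)


AIR_COOLED_PUE = 1.7  # figure quoted in the article for air cooling

for immersion_pue in (1.03, 1.05):  # figures quoted for immersion cooling
    cut = overhead_reduction(AIR_COOLED_PUE, immersion_pue)
    print(f"PUE {AIR_COOLED_PUE} -> {immersion_pue}: overhead falls by {cut:.0%}")
```

Going from 1.7 to 1.05 removes about 93 percent of the overhead, and 1.03 removes about 96 percent, which is consistent with the 90 percent figure in the quote.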

In addition to 90 percent less cooling energy, the project reduced the electricity required for the servers by 20 percent and cut data center construction costs by about 60 percent. The company has now completed installations around the country and also in Japan, Australia, Canada, the UK, Spain, Austria and Costa Rica.

Liquid cooling may start to catch on, says Lucas Beran of IHS Markit, a research company. “It could become a big movement because it’s a good way to cool servers. You want to do your cooling as close to the actual servers as possible, rather than cooling the whole room. It’s much more efficient that way.” His company has studied the cooling journey of the IT industry. He points out that there are also efficiencies being found in the electricity systems powering the servers themselves, such as innovations with cable feeds from utilities and backup power systems, but he acknowledges that many recent wins have come in the area of cooling.

Data center veteran Julius Neudorfer of North American Access Technologies Inc. in Hawthorne, New York, co-authored The Green Grid’s Liquid Cooling Technology Update white paper, which dispels the myths and misconceptions about liquid cooling. He tries to counter what he calls ‘data center hydrophobia’ in webinars on liquid cooling. He lists the benefits of liquid cooling as power savings, reduced copper losses, elimination of chillers, more compact equipment, space savings, and fewer fans and vibrations.

He notes that ASHRAE recommends data center operating temperatures below 80 F. Air cooling exhausts at 85 F-125 F with a delta T of 40 F, while liquid cooling has a supply temperature of 36 F-125 F and a return temperature of 105 F-145 F with a delta T of 20 F.

In addition, he says that liquid cooling permits higher density of processors. Densely packed IT systems achieve better performance for big data, and allow designers to increase total rack power from 20 kW to 100 kW or more. Air cooling can’t really get to 100 kW, whereas liquid cooling goes beyond 100 kW routinely and has been known to go beyond 200 kW.
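The gap between air and liquid comes down to how much heat a given volume of fluid can carry away. A rough sketch using the standard relation heat = density x specific heat x flow x delta T shows the flow needed to cool a 100 kW rack at the delta T figures Neudorfer cites. The fluid properties are generic textbook approximations, not data from GRC or Neudorfer, and the oil values in particular are an assumption for a typical mineral oil.

```python
def delta_f_to_kelvin(delta_f: float) -> float:
    """Convert a temperature difference from Fahrenheit degrees to kelvin."""
    return delta_f * 5.0 / 9.0


def required_flow_m3_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volumetric flow needed to carry heat_w watts: Q = rho * cp * flow * delta_T."""
    return heat_w / (density * cp * delta_t_k)


# Approximate properties (assumptions): density in kg/m^3, specific heat in J/(kg*K).
AIR = {"density": 1.2, "cp": 1005.0}
MINERAL_OIL = {"density": 850.0, "cp": 1670.0}

RACK_POWER_W = 100_000  # the 100 kW rack mentioned in the article

air_flow = required_flow_m3_s(
    RACK_POWER_W, AIR["density"], AIR["cp"], delta_f_to_kelvin(40)
)
oil_flow = required_flow_m3_s(
    RACK_POWER_W, MINERAL_OIL["density"], MINERAL_OIL["cp"], delta_f_to_kelvin(20)
)
# Heat carried per unit volume per degree, oil relative to air.
capacity_ratio = (MINERAL_OIL["density"] * MINERAL_OIL["cp"]) / (AIR["density"] * AIR["cp"])

print(f"Air needed: {air_flow:.2f} cubic meters per second")
print(f"Oil needed: {oil_flow * 1000:.1f} liters per second")
print(f"Oil carries about {capacity_ratio:.0f} times more heat per unit volume than air")
```

With these assumed properties the per-volume ratio comes out a little under 1,200, in the same ballpark as the figure Moore quotes, and the rack needs several cubic meters of air every second versus a few liters of oil.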

Portable DCs

Packaging servers and liquid cooling together so compactly and efficiently also led designers to a solution for other kinds of data center problems: portable DCs built inside shipping containers. These can be moved by truck, rail or air and craned into position in remote places, or added for emergency load enhancements.

“Our containerized data centers are great because you can rapidly deploy the container anywhere, in a parking lot or field. You just drop it, connect electrical power and a water feed to the cooling towers and turn it on. And because it’s a sealed unit, it can be put into almost any environment: hot, cold, sandy, dusty, it doesn’t matter. A helicopter could lift off right beside it without any negative effects. If it’s cold, as long as it’s turned on, it won’t freeze. It’s a very resilient solution.”

Dr. Jens Struckmeier of Cloud & Heat Technologies GmbH in Dresden, Germany, also designs portable data center solutions, as GRC does, along with onsite DCs and upgrades for the biggest users on the planet and many smaller organizations too. His company’s sea container product houses six server cabinets and stores its hydraulics and cable management under an interior floor.

Using data center heat elsewhere

Many of the company’s projects realize dramatic energy savings, not only through liquid cooling, but also by using the energy removed from the data center for space heating in other areas of buildings. This was done recently in Frankfurt in a complex that included offices, conference rooms, hotel rooms and restaurants. To manage fluctuating demand, a heat storage tank is used.

“A plate heat exchanger acts as a transfer point and separates the heat source from the distribution side … a demand cooler is switched on when a certain return flow temperature limit is exceeded. There are two possibilities:

- Additional cooling by cold water: The return flow of the heating system is mixed with cold water by means of a valve circuit. For this purpose, a temperature of maximum 122 F is guaranteed. It should be noted that the cold water meets the requirements as cooling medium of the plate heat exchanger. To get cold water in a closed hydraulic cycle, the return flow, if too warm, can be cycled through a dry air/water heat exchanger that can be stationed outside. In most climates, the outside air temperature is sufficiently cold that no adiabatic cooling is necessary when properly dimensioned. Alternately, cold-water reservoirs could be utilized.

- Additional cooling by air: The server racks can be supplied with an integrated demand cooler. If the critical temperature is exceeded, the demand cooler is switched on. The entire electrical power is transferred to the room as heat energy. Room ventilation may be required to prevent an uncontrolled rise in room temperature.”
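The switching rule described in the quote can be sketched in a few lines. This is only an illustration: the class and parameter names are hypothetical, the 122 F threshold simply borrows the figure mentioned in the quote, and the 5 F hysteresis band is an added assumption to keep the cooler from cycling on and off rapidly. None of it reflects Cloud & Heat's actual control settings.

```python
from dataclasses import dataclass


@dataclass
class DemandCooler:
    """Toy on/off controller driven by the heating loop's return flow temperature."""

    limit_f: float = 122.0      # assumed switch-on threshold, in degrees F
    hysteresis_f: float = 5.0   # assumed band below the limit before switching off
    running: bool = False

    def update(self, return_temp_f: float) -> bool:
        """Feed in a new temperature reading and return whether the cooler runs."""
        if return_temp_f > self.limit_f:
            self.running = True
        elif return_temp_f < self.limit_f - self.hysteresis_f:
            self.running = False
        # Between the two thresholds the previous state is kept.
        return self.running


cooler = DemandCooler()
readings_f = [110.0, 121.0, 123.5, 120.0, 116.0]
states = [cooler.update(t) for t in readings_f]
print(states)  # [False, False, True, True, False]
```

The cooler stays off while the building absorbs the heat, switches on once the return flow runs too warm, and switches off again only after the loop has cooled clearly below the limit.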

This company might be on the leading edge, using the data center heat that might otherwise be wasted, and also helping smaller data center operators to reduce their energy use, carbon footprint and costs by sharing (“co-locating”) liquid cooled facilities. Struckmeier says, “We have been operating our own public cloud infrastructure in distributed data centers. The recently opened Frankfurt location is being equipped with the latest Intel technology and the innovative water-cooling system. Customers can book classical IaaS resources or Hosted Cloud services by booking complete server blades or racks. Thus, they do not need to set up their own cost-intensive data centers for compute-intensive jobs, such as big data analysis or Industry 4.0 applications.”

He describes an example project: “125 server racks on three floors equipped with a total of 75 percent CPUs, 20 percent GPUs, 3 percent HDD and 2 percent others … CPUs and GPUs (graphics cards) are water-cooled with a regulated output temperature of 140 F-149 F. Transmitted via a heat exchanger to a heating circuit, approximately 135 F-145 F can be achieved. If the waste heat can be re-used, the servers do not have to be cooled additionally. Thus, energy of 95 percent of the IT equipment can be used. Five percent have to be air cooled.”

He says that, assuming the data center achieves an annual capacity utilization of approximately 50 percent, 160,000 euros of heating costs and 320,000 euros of cooling costs are being saved, for a total of 480,000 euros, or about $580,000.
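Because the savings are quoted in euros and the total in dollars, it helps to spell out the arithmetic. The short check below uses only the three figures given in the article. The exchange rate it prints is simply what those figures imply, not a rate stated by Struckmeier.

```python
HEATING_SAVED_EUR = 160_000
COOLING_SAVED_EUR = 320_000
QUOTED_TOTAL_USD = 580_000

# Sum the two savings in their original currency.
total_eur = HEATING_SAVED_EUR + COOLING_SAVED_EUR

# Exchange rate implied by the quoted dollar total (derived, not from the source).
implied_usd_per_eur = QUOTED_TOTAL_USD / total_eur

print(f"Total savings: {total_eur:,} euros")
print(f"Implied exchange rate: {implied_usd_per_eur:.2f} dollars per euro")
```

The two euro figures add up to 480,000, so the dollar total implies a rate of roughly 1.2 dollars per euro.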

As strange as it may seem, it looks like liquid cooling of electronic components might actually be a thing. It might even be a solution for how the planet can manage energy during your endless gaming and Facebook or Netflix binges.